Meanwhile, at Google…

While government continues to hash out the same tired issues (individual versus collective rights, equality and what it entails, and how to implement policies of distributive justice) without agreement and with little progress (good job, SCOTUS, on Obergefell), the scientific community is moving full speed ahead. The resource-rich tech industry is moving doubly fast, with solutions that may render some of those debates obsolete.

Google’s “Research Blog” published a widely shared article last year about the progress of their work on artificial neural networks for image recognition, in particular a method they’ve code-named “Inception.” (According to the corresponding paper, “Going Deeper with Convolutions,” posted to the open-access arXiv.org, the name echoes the famous “We Need To Go Deeper” Internet meme.) Image recognition software isn’t new (indeed, optical character recognition, the conversion of images to text, has been around since the 1970s), but such programs have not been particularly complex. They usually work at a single level, searching for specific visual cues and patterns. (For a laugh, see Churchix, software built for church assemblies to monitor attendance through facial recognition.)

Google’s software stems from work in deep learning, a branch of machine learning that uses multilayer algorithms to extract increasingly abstract concepts from the data they process, and that some consider one step closer to artificial general intelligence (AGI).

Inception is what’s known as a convolutional neural network, a type of neural architecture in which a first layer of neurons responds to overlapping parts of visual space and sends signals through multiple further layers of neurons that synthesize and sort the information into what is useful and relevant to our attention, mimicking the way in which biological information processing (like our visual perception) works. In this case, Google Research bloggers say that images are “fed into the input layer” of artificial neurons and progress through each layer (there can be 10 to 30 stacked layers) until they reach the output layer that “makes a decision on what the image shows.” It turns out this decision is not so straightforward. By feeding the network millions of images and adjusting what each layer of neurons looks for (for example: edges, corners, shapes, colors, specific combinations of features, etc.), we can get the network to give us the output we are looking for. In other words, we can train a network to find Waldo the way we would go about looking for Waldo: by looking for the red and white stripes, and, if need be, his glasses, hair, and facial expression.
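To make the “overlapping parts of visual space” idea concrete, here is a minimal sketch of what a single convolutional layer does. It is not Google’s code: the 4x4 image, the `vertical_edge` filter, and the function names are all made up for illustration, and a real network learns its filters from training data instead of having them written by hand.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over every overlapping patch of `image`.

    This is the sliding-window sum that deep learning libraries call a
    convolution (strictly speaking, a cross-correlation).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Weighted sum of the patch whose top-left corner is (r, c).
            total = sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            row.append(total)
        output.append(row)
    return output


def relu(feature_map):
    """Keep positive responses and zero out the rest."""
    return [[max(0, value) for value in row] for row in feature_map]


# Hypothetical 4x4 grayscale image: dark left half, bright right half.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-written filter that responds to a dark-to-bright vertical edge.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = relu(convolve2d(image, vertical_edge))
for row in feature_map:
    print(row)
```

The middle column of the resulting feature map lights up exactly where the dark half meets the bright half. A deep network stacks many such layers, so that later ones respond to combinations of edges (corners, stripes, and eventually something like Waldo’s shirt).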

And we can make this network more sophisticated. By training the network to process and decide on different types of images, we can determine what it considers as the defining features of each image in order to determine whether its definition is accurate. For example, the Google Research bloggers showed an example of the output from a neural network looking for dumbbells, in which the neural network’s definition of a dumbbell came with the inaccurate defining characteristic of including a floating muscular arm:

(Photo via Google Research Blog)

But the coolest part is why it’s code-named Inception.

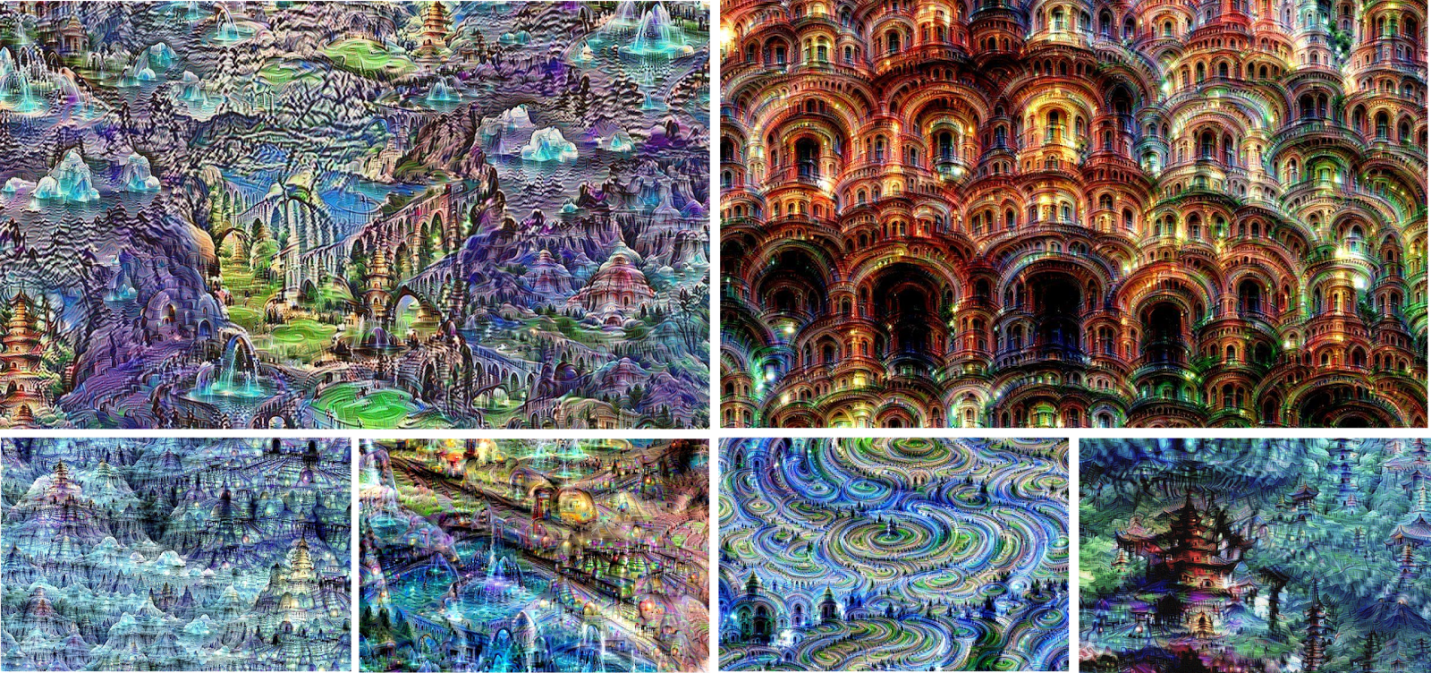

The researchers went a step further and applied their neural networks to random noise images. The network’s extracted output was then sent through the algorithm again and again so that “the result becomes purely the result of the neural network,” making for some vibrant original images. This in turn led the bloggers to philosophically conclude that perhaps these networks could become a tool for artists as a “new way to remix visual concepts—or perhaps even shed a little light on the roots of the creative process in general.”
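The feedback loop described above can be sketched in a few lines. This is a toy under loud assumptions, not the researchers’ method: the “network” here is a single hypothetical linear stripe detector (`pattern`), the “image” is six pixels in a row, and the step size and iteration count are arbitrary. The real system pushes the image through a trained deep network at each step.

```python
import random


def response(image, pattern):
    """How strongly the (hypothetical) detector fires on the image."""
    return sum(weight * pixel for weight, pixel in zip(pattern, image))


def amplify(image, pattern, steps=50, step_size=0.1):
    """Repeatedly nudge the image toward whatever the detector responds to."""
    image = list(image)
    for _ in range(steps):
        # For a linear detector, the gradient of the response with respect
        # to each pixel is simply that pixel's weight in the pattern.
        image = [pixel + step_size * weight for pixel, weight in zip(image, pattern)]
        # Keep pixel values in the displayable range [0, 1].
        image = [min(1.0, max(0.0, pixel)) for pixel in image]
    return image


random.seed(0)
pattern = [1, -1, 1, -1, 1, -1]  # made-up "stripes" detector
noise = [random.random() for _ in pattern]  # random starting image

dreamed = amplify(noise, pattern)
print("stripe response before:", round(response(noise, pattern), 3))
print("stripe response after: ", round(response(dreamed, pattern), 3))
print("dreamed image:", dreamed)
```

Whatever noise you start from, the loop ends on the same clean stripes. Nothing of the original noise survives, which is one way to read the bloggers’ remark that the result becomes “purely the result of the neural network.”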

(Photo via Google Research Blog)

This is not surprising, nor is it a singular achievement of deep learning research. Google has multiple research initiatives in place with the objective of furthering artificial intelligence and robotics, as evidenced by its stream of research-based acquisitions from 2013 to 2014.

Two examples of Google AI projects that are part of DeepMind (a $400 million Google acquisition of last year, the objective of which is “to solve intelligence”) are: (1) using the volumes of Daily Mail and CNN articles stored online to hone its neural networks in natural language processing, and (2) teaching deep learning machines to “learn” from their mistakes and master Atari video games.

And these projects are proof in themselves of one of Google’s ten core beliefs: “You can make money without doing evil.” (This is adapted from Google’s former slogan: “Don’t be evil.”) The results of these projects can be directly translated into ways to make search features on Google and YouTube increasingly intuitive, responsive to context, and aligned with how we think so as to make technology feel more “natural” to use. (They can also directly feed into initiatives of Google X, a somewhat hush-hush facility for exploring “science fiction-sounding solutions.”)

For all those who are still skeptical about artificial intelligence and learning, it may be of some comfort that Google and most technologists express humanistic intentions, and that they are arguably moving faster than anyone else to make life better for us all. When asked about the possibility of machines with moral autonomy (the implication being that such machines would make choices we find immoral, which in turn implies that human nature is inherently corrupted), DeepMind cofounder Demis Hassabis told Wired UK:

Of course we can stop it—we’re designing these things. Obviously we don’t want these things to happen. We’ve ruled out using our technology in any military or intelligence applications. What’s the alternative scenario? A moratorium on all AI work? What else can I say except extremely well-intentioned, intelligent, thoughtful people want to create something that could have incredible powers of good for society, and you’re telling me there’s people who don’t work on these things and don’t fully understand.

And as long as Google remains sincere, well-intentioned, and intelligent, I don’t mind supporting these advancements while not fully understanding their work because I know they’re based on scientific research, strategic thinking, and humanistic aims. (Though I hope my children and grandchildren will fully get it—which is a whole other conversation on how education itself ought to work in order to keep up!)