Artificial Intelligence Shouldn’t Be Feared, But We Should Keep an Eye on Its Architects

AHA interviews AI expert and technology futurist Dale Kutnick

Photo by Possessed Photography on Unsplash

AHA’s David Reinbold recently sat down with technology futurist, philanthropist, and investor Dale Kutnick to talk about artificial intelligence, its impact on humanists (and, more broadly, on humanity), and some of its possible applications as we look toward the future.

Kutnick recently retired as SVP and Distinguished Analyst at Gartner, Inc., after spending forty-seven years in the IT research advisory business in senior management and research director positions. He also co-founded two companies in the research business: Yankee Group and META Group (the latter went public in 1995). Kutnick has been a member of numerous boards of directors and technology advisory boards for private, public, and nonprofit organizations. He previously served on the technology advisory boards of Danbury Hospital, Sanofi, Lux Research, and Ipsen, and he currently sits on the advisory board of the World Food Program and on the Board and Executive Committee of Riverkeeper. He is a graduate of Yale University.

Read their Q&A interview below:

David Reinbold: The principles of humanism emphasize values such as reason, compassion, and altruism, and they encourage us to lead lives in which human freedom and ethical responsibility are natural aspirations for everyone. Does AI technology align with these principles?

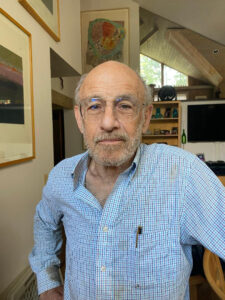

Dale Kutnick

Dale Kutnick: AI is neutral on most, if not all, human-driven principles. It can be used to dramatically advance Homo sapiens’ knowledge, efficiency, and lifestyles, as well as the health of the planet, or it can be exploited to enrich its purveyors and exploiters. But in general, knowledge advancement, science, tech-driven efficiencies, and more data-based decisions and judgments should improve the distribution of the planet’s resources and therefore improve the lives of more humans (and other species) on the planet. In sum, I believe that advanced technology will (eventually) enable us to solve the problem of climate degradation, as well as the constraints on sharing the planet’s resources that we are experiencing. Many would call this techno-optimism.

Reinbold: Where might AI and the principles of humanism diverge?

Kutnick: Primarily when science and tech are exploited by a relative few for their own selfish (e.g., capitalist, enriching) motives and aggrandizement. For instance, will genetic engineering advancements (human enhancement, the elimination of certain diseases, genetic programming of specific enhanced characteristics such as oxygen uptake or fast-twitch muscles, and the elimination of genetic disorders like diabetes) be regulated and/or priced so that they are only available to, or affordable for, the wealthy, or will they be democratized? This is a sociopolitical question more than a tech/science one. Again, AI is neutral on ethics, freedom, choice, human aspirations, etc. It can be “influenced,” “guided,” or driven by its purveyors’ data set choices, biases, goals, and perspectives. AI already is, and increasingly will be, a huge potential source of competitive advantage for some companies and individuals, much like genetic engineering: out with the “bad” genes that cause debilitating conditions, in with those that enhance our physical and mental prowess and our ability to survive, mate, and succeed.

Reinbold: AI has the potential to significantly impact various aspects of society, including employment, healthcare and education. How do you see AI technologies influencing notions of justice and fairness in these domains?

Kutnick: AI, and tech in general, can be used to significantly advance justice and fairness in society by helping to provide more equitable resource distribution and greater resource availability. This will give us improved, more efficient production and distribution of “The 8 Basics of 21st Century Society,” which are akin to Maslow’s “hierarchy of needs,” updated for the 21st century: 1. Food/water/nourishment; 2. Clothing/basic appurtenances; 3. Shelter; 4. Healthcare/wellness; 5. Energy; 6. Education; 7. Physical safety; 8. Info-tainment (high-speed internet).

Agritech, technological and other efficiencies and advancements, along with falling birth rates in developed societies, proved the dire predictions of Paul Ehrlich’s The Population Bomb (1968), which foresaw widespread famine by the 1990s, to be totally wrong. The planet’s absolute poverty rate has fallen significantly during the past sixty years (mostly due to China’s dramatic improvements, but also those of some other countries), as have undernourishment, malnourishment, and childhood mortality (thanks to better nourishment, vaccines, etc.), while the treatment and prevention of diseases such as TB, polio, and malaria have improved. There would be plenty of food for the entire planet if we curtailed the use of food crops (such as corn) to make biofuels and could transport surpluses to war-torn areas.

Reinbold: One of the concerns surrounding AI is the potential for biases in algorithms, which can lead to unfair outcomes, discrimination and perpetuation of existing societal inequalities. How do you respond to that?

Kutnick: Absolutely true. Again, AI “itself” is neutral on issues such as bias, discrimination, and, ultimately, repudiation in insurance, work, healthcare, etc. It is a powerful tool, akin to nuclear fission or fusion. As Oppenheimer, Einstein, and many others have noted, nuclear energy can be exploited to provide virtually free (other than high operating costs), potentially nonpolluting energy if managed properly. Or that energy could be used to destroy the planet.

AI and tech can and will be exploited by capitalists and governments for competitive advantage, by building their biases and intentions (control of outcomes, etc.) into the algorithms and the data they collect. AI can’t fix humans’ underlying psychosocial and evolved self-preservation issues (such as our competitive biases, linked to natural selection and competition for survival) unless we direct it to do so. But there are too many bad actors, including some governments, who will use AI to increase their power and hegemony.

Reinbold: How can we ensure that AI systems are developed and deployed ethically to uphold humanist values?

Kutnick: Unfortunately, we cannot. AI software and algorithms are already too ubiquitous. Many are open source and widely available, and they are spreading like lava from a volcano. Controlling the burgeoning data (especially the sharing and exploiting of personal data and data sets, meaning collections of data assembled for specific purposes), managing biases (at a government level), and regulating, insinuating, or legislating specific targets and outcomes (such as more humanistic values) is a tall order. We’d first need to ask whose humanist values we are trying to uphold. There is significant dissonance on that issue among various factions.

Who controls the data is the existential question–and there are only three choices (and some combinations of them).

- In China, it is obviously the government, which doles out some “concessions” to “well-behaved” capitalists, like Alibaba, Huawei, Tencent, or ByteDance (TikTok’s parent company);

- In the United States, it is primarily the capitalists (with some government regulation, and of course the CIA, NSA, NSC, and others get what they want sub rosa); in Europe, it is a hybrid of the two approaches, and most governments claim they don’t collect the data;

- Individuals control their own data, such as on a blockchain. This sounds great, but people are too easily duped, bribed, coerced, exploited, quixotic, etc., and are often driven by convenience, expedience, or both, so, in general, this third option is a non-starter for most.

Reinbold: As humanists specifically–and nontheists generally–we aspire to lead ethical lives that are “Good Without a God.” Many folks in society derive their ideas about ethics and morality through a religious lens. How do you see AI technology intersecting with religious values and beliefs?

Kutnick: At its core, AI has no values or beliefs per se. It can certainly evolve them based on the data it selects (or that specific humans select for it), digests, weights, and packages into data sets (which have all sorts of biases); that data will then be considered and “weighted” against any biases that are incorporated.

Reinbold: What challenges might arise in reconciling AI with various religious perspectives?

Kutnick: Biases in the data sets it considers. If an AI had only the Old Testament or the Koran as its primary sources of data, or was instructed to give these higher weightings, it would deliver results quite contradictory to those of scientifically driven atheists (or apatheists, like me).

Reinbold: Some argue that AI could challenge the concept of human uniqueness and our traditional understanding of consciousness. What are your thoughts on this?

Kutnick: We are already there for some of us. Many “scientific atheists” (and derivatives) think of consciousness as a human invention for explaining our place and hegemony on the planet. For me, and many others, “consciousness” is nothing more than humanity’s egocentric, anthropomorphic self-awareness, which manifests in psychobabble that distinguishes humans from animals, plants, rocks, elements, and raw genetic material (such as viruses).

So “consciousness” is nothing more than a second- and third-order derivative of the combination of our senses’ data collection and the actions they precipitate for survival (for example: fight or flight, enjoy the drink or meal, or “this is a fine mate with whom to propagate my genetic material”). This is too often linked to procreation (i.e., survival), which is often driven by our interpretation of the physical world we inhabit and adapt to our needs; competition for scarce resources drives this. But what if resources are no longer scarce?

AIs are already passing the “Turing Test” (meaning their answers are indistinguishable from those of humans answering similar questions) and surpassing humans’ capabilities in increasingly complex activities. Some AIs have already declared themselves to be “conscious.”

Reinbold: How do you see AI affecting our understanding of what it means to be human and the principles of humanism?

Kutnick: Well, in addition to AI, I would also add genetic engineering, material sciences, sustainable energy transitions, transhumanism, computer-brain interfaces, etc. to your question. All of these will both impact, and be impacted by, the evolution and exploitation of AI. As for Homo sapiens’ next evolution, some of us believe that the current Anthropocene epoch will soon give way to the era of a new, superior subspecies: CyberBioSapiens.

CyberBioSapiens would be an “evolved” subspecies that synergistically integrates intelligent sensors and prosthetics, processors, memory, wireless connections, and more, driven by AI software. They would also have genetically engineered “modifications” against most inherited diseases and conditions, would likely possess some “enhancements,” and, finally, would retain some parts of the human genome.

This is already well underway (“intelligent” artificial body parts, genetic “reprogramming,” robotics, etc.). Some scientists, like Ray Kurzweil, and writers, like Yuval Noah Harari, believe this evolution will culminate in Homo sapiens’ “disappearance” within roughly 100 to 150 years. So AI and its derivatives will be as cataclysmic as Galileo’s heresy that the Earth orbits the sun; Columbus’ proving that the Earth wasn’t/isn’t flat; Darwinian evolution; Pasteur’s “discovery” of germs/bacteria; Einstein’s theory of relativity and E = mc²; etc.

Reinbold: The ethical dilemmas presented by AI are complex, nuanced and evolving. How can individuals, organizations, and policymakers stay informed and adapt to these challenges to ensure that AI technologies benefit humanity in alignment with humanist principles?

Kutnick: We need to stop putting our individual and collective heads in the sand and pretending that this evolution isn’t happening. And we must stop the fear-mongering about it. We need to continuously educate ourselves in the science and technologies that are increasingly affecting our world.

We must explore opportunities for using AI software and adopt it, where appropriate, as a new tool in our daily lives. AI can sharpen our business acumen, deepen our knowledge and understanding of many topics, help solve difficult problems, improve our health and wellness, increase our efficiency, and much more.

We must become more adept at managing our own data (and digital personas) and information so that we can better secure them and use them appropriately. We should lobby the government and lawmakers to pass laws restricting the exploitative use of our personal data without our consent.

We should try to ensure that AI is more fully utilized to improve the lives of Homo sapiens on a more equal basis (providing the “8 Basics” I referenced earlier).

We should encourage more and deeper research into AI and its derivatives, especially drug development, genetic engineering, robotics, material sciences, energy production and storage, better food and water production and management, better education, etc.

Reinbold: Looking to the future, what are your recommendations for researchers, developers and policymakers to navigate the ethical landscape of AI and its relationship with humanism effectively?

Kutnick: Beyond the points I already made, I believe we must ensure that more people understand and appreciate the power and potential of AI to dramatically improve the lives of citizens on our planet (again, by better providing everyone with the “8 Basics” I referenced earlier). We can use AI as a tool to reduce suffering among a larger percentage of Earth’s citizens who’ve been left out of advances; to better balance the human footprint and usurpation of the planet’s resources (more and better use of renewable energy); and to improve health (new devices, treatments, genetic engineering) for everyone. Hopefully, these more altruistic, empathetic perspectives can be incorporated into future AI endeavors instead of merely increasing the wealth and control of capitalists and various governments.

Reinbold: Any additional thoughts you’d like to add about AI and humanism?

Kutnick: As a pragmatic techno-optimist, I believe that we should be making a more concerted effort to exploit advanced technologies to address the current and upcoming (mostly human-induced) existential crises facing all Homo sapiens (as well as the flora and fauna that inhabit our planet): climate change, hunger, access to fresh water, the elimination of plastic and other pollution, better sanitation, access to healthcare/wellness, education, safety, and so much more.